Fixing `RuntimeError: tf.gradients is not supported when eager execution is enabled. Use tf.GradientTape instead.`

I was following a code sample from Chollet's book that visualizes convnet filters. Because of API changes in TensorFlow 2, the original code breaks and tells us to use tf.GradientTape.

Note that this is a work in progress. I have not managed to fix it yet.

Background

Originally, Chollet's code uses Keras backend functions: K.mean, K.square, K.sqrt, K.gradients, K.function (link to the Colab notebook):

    def generate_pattern(layer_name, filter_index, size=150):
        # Build a loss function that maximizes the activation
        # of the nth filter of the layer considered.
        layer_output = model.get_layer(layer_name).output
        loss = K.mean(layer_output[:, :, :, filter_index])

        # Compute the gradient of the input picture wrt this loss
        grads = K.gradients(loss, model.input)[0]

        # Normalization trick: we normalize the gradient
        grads /= (K.sqrt(K.mean(K.square(grads))) + 1e-5)

        # This function returns the loss and grads given the input picture
        iterate = K.function([model.input], [loss, grads])

        # We start from a gray image with some noise
        input_img_data = np.random.random((1, size, size, 3)) * 20 + 128.

        # Run gradient ascent for 40 steps
        step = 1.
        for i in range(40):
            loss_value, grads_value = iterate([input_img_data])
            input_img_data += grads_value * step

        img = input_img_data[0]
        return deprocess_image(img)

The code produces this error:

RuntimeError: tf.gradients is not supported when eager execution is enabled. Use tf.GradientTape instead.

The error says that tf.gradients does not work while eager execution is enabled, which is the default in TensorFlow 2. So the options are either to disable eager execution or to use tf.GradientTape. The latest version of the Colab hasn't been updated to work with TensorFlow 2, so readers of the book have filed issues, with no answer:

- https://github.com/fchollet/deep-learning-with-python-notebooks/issues/134

- https://github.com/fchollet/deep-learning-with-python-notebooks/issues/143
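To make the two options from the error message concrete, here is a minimal sketch on a toy loss, sum(x**2), rather than on the book's model. The function names grad_graph_mode and grad_eager_mode are made up for this illustration. The first runs tf.gradients inside an explicit TF1-style graph, where eager execution is off (calling tf.compat.v1.disable_eager_execution() at the top of a script has a similar effect globally). The second uses tf.GradientTape in the default eager mode.

```python
import numpy as np
import tensorflow as tf


def grad_graph_mode(values):
    """Gradient of sum(x**2) via tf.gradients, inside a TF1-style graph."""
    graph = tf.Graph()
    with graph.as_default():  # eager execution is off inside this block
        x = tf.compat.v1.placeholder(tf.float32, shape=(None,))
        loss = tf.reduce_sum(tf.square(x))
        grads = tf.gradients(loss, x)[0]  # symbolic gradient tensor
    # Symbolic tensors only get values when run in a session
    with tf.compat.v1.Session(graph=graph) as sess:
        return sess.run(grads, feed_dict={x: values})


def grad_eager_mode(values):
    """Same gradient via tf.GradientTape, in TF2's default eager mode."""
    x = tf.constant(values, dtype=tf.float32)
    with tf.GradientTape() as tape:
        tape.watch(x)  # plain tensors are not tracked automatically
        loss = tf.reduce_sum(tf.square(x))  # computed while the tape records
    return tape.gradient(loss, x).numpy()


# The gradient of sum(x**2) is 2*x in both cases
print(grad_graph_mode([1.0, 2.0, 3.0]))
print(grad_eager_mode([1.0, 2.0, 3.0]))
```

The important difference is that the tape only sees operations that actually run inside the `with` block, on concrete tensors.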

First try with tf.GradientTape

Using tf.GradientTape is a bit different: you have to feed your data to your model manually, then compute the loss and gradients yourself. Instead of K.mean and friends, I tried using NumPy's equivalent functions:

    def get_convnet_display(convnet_layer, filter_index):
        # gray image with some noise
        input_image = np.random.random((1, 150, 150, 3)) * 20 + 128

        with tf.GradientTape() as tape:
            step = 1
            for i in range(40):
                # feed the input image to the model
                model(input_image, training=False)

                # compute the loss
                loss_value = np.mean(convnet_layer.output[:, :, :, filter_index])

                # compute the gradient relative to the input image
                grads = tape.gradient(loss_value, [model.input])
                grads /= np.sqrt(np.mean(np.square(grads))) + 1e-5

                # gradient ascent on the input image
                input_image += gradient * step

        return input_image

But I got this error:

TypeError: Cannot convert a symbolic Keras input/output to a numpy array. This error may indicate that you're trying to pass a symbolic value to a NumPy call, which is not supported. Or, you may be trying to pass Keras symbolic inputs/outputs to a TF API that does not register dispatching, preventing Keras from automatically converting the API call to a lambda layer in the Functional Model.

What this means is that I can't process the model's symbolic outputs directly as if they were NumPy arrays. If I replace the loss computation with loss_value = K.mean(convnet_layer.output[:, :, :, filter_index]), the mean evaluates without an error. When I print out my loss, I get:

KerasTensor(type_spec=TensorSpec(shape=(), dtype=tf.float32, name=None), name='tf.math.reduce_mean_4/Mean:0', description="created by layer 'tf.math.reduce_mean_4'")

But then the computation of the gradient fails with this error:

AttributeError: 'KerasTensor' object has no attribute '_id'
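Both errors seem to share a root cause: convnet_layer.output and model.input are symbolic KerasTensor placeholders from the model definition, not values computed by the model(input_image) call, so the tape has recorded nothing it can differentiate. Below is a minimal sketch of a tape-based loop that works on concrete tensors instead. It is not the book's code and has not been run against the book's VGG16 model. It assumes a tiny, randomly initialized stand-in network (build_tiny_convnet is a hypothetical helper), wraps the target layer in a sub-model named feature_extractor (a name chosen here), and swaps the K.* calls for tf.reduce_mean, tf.square and tf.sqrt.

```python
import numpy as np
import tensorflow as tf


def build_tiny_convnet(size=32):
    """Hypothetical stand-in for the book's model (random weights)."""
    inputs = tf.keras.Input(shape=(size, size, 3))
    x = tf.keras.layers.Conv2D(4, 3, activation="relu", name="conv_a")(inputs)
    outputs = tf.keras.layers.Conv2D(8, 3, activation="relu", name="conv_b")(x)
    return tf.keras.Model(inputs, outputs)


def generate_pattern_tape(model, layer_name, filter_index,
                          size=32, steps=40, step=1.0):
    # Sub-model mapping an input image to the chosen layer's activations
    feature_extractor = tf.keras.Model(
        model.input, model.get_layer(layer_name).output)

    # Gray image with some noise, as a concrete float32 tensor
    noise = np.random.random((1, size, size, 3)) * 20 + 128.0
    img = tf.convert_to_tensor(noise.astype("float32"))

    for _ in range(steps):
        with tf.GradientTape() as tape:
            tape.watch(img)  # track the input image
            # The forward pass runs inside the tape, on real values
            activation = feature_extractor(img, training=False)
            loss = tf.reduce_mean(activation[:, :, :, filter_index])
        # Gradient of the loss with respect to the input image
        grads = tape.gradient(loss, img)
        # Same normalization trick as in the original code
        grads /= tf.sqrt(tf.reduce_mean(tf.square(grads))) + 1e-5
        # Gradient ascent step
        img = img + grads * step

    return img.numpy()[0]


model = build_tiny_convnet()
pattern = generate_pattern_tape(model, "conv_b", filter_index=0, steps=5)
print(pattern.shape)
```

With the book's model, the returned array would still need to go through deprocess_image before being displayed, as in the original generate_pattern.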

On tensorflow.org, there is a page dedicated to porting code from TensorFlow 1 to TensorFlow 2. The paragraph about writing your own loops seems relevant: https://www.tensorflow.org/guide/migrate#write_your_own_loop. I'll try to use that to fix my own code later.

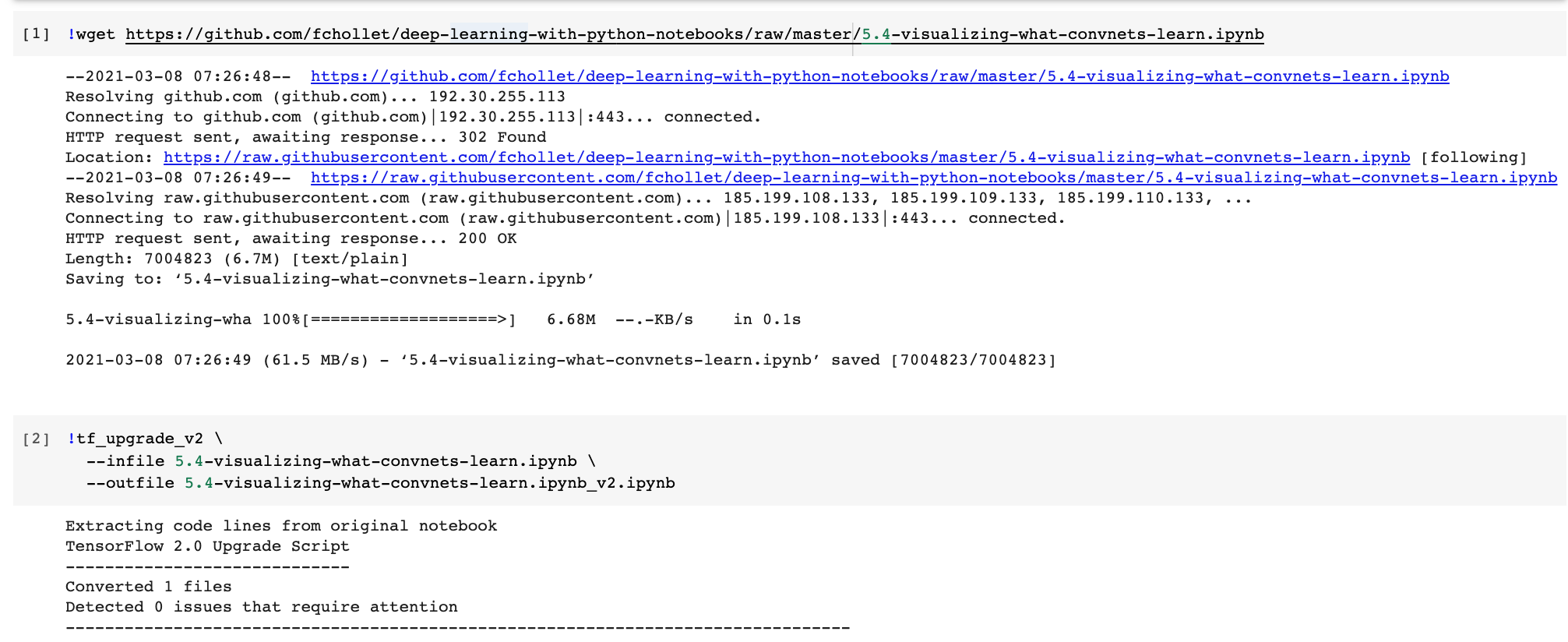

Trying out the converter

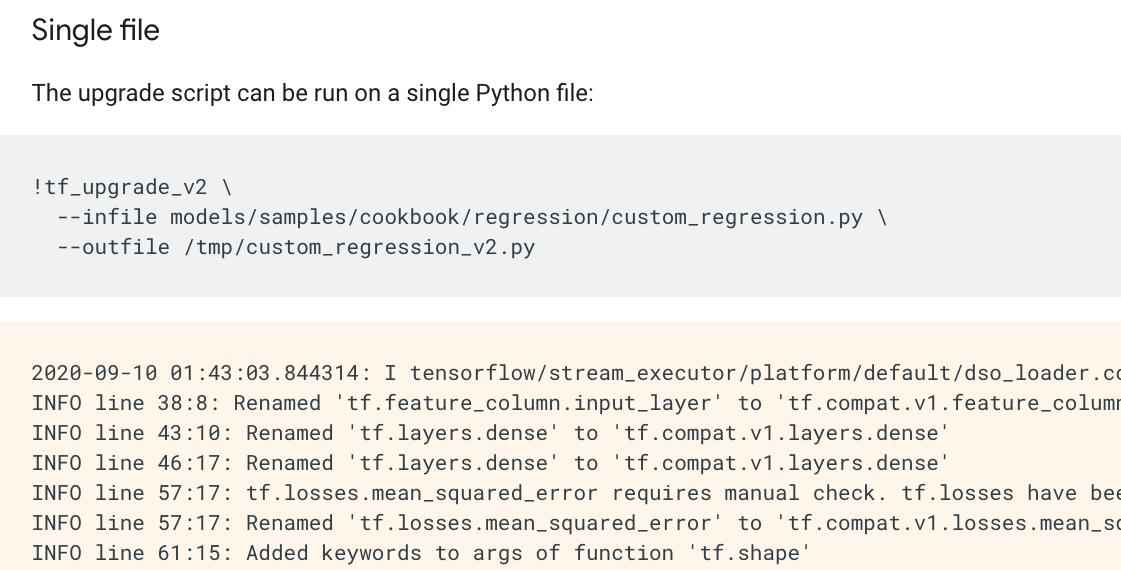

https://www.tensorflow.org/guide/upgrade provides a tool to automatically migrate code from TF1 to TF2. The example shows the logs of such a conversion:

I tried it on Chollet's notebook. But as you can see, it converted nothing.

So yeah, I really should just dig into the API and understand how to write my own loops. But it's way above my level right now.